Transfer learning is where Bayesian optimization gets interesting

Transfer learning is where Bayesian optimization gets interesting.

In theory, reusing past experiments should always help. In practice, transfer learning is one of the fastest ways to introduce hidden bias into an optimization loop, which can either be good or bad, depending on the context.

Chemistry, materials, and process systems almost always require optimization in many different dimensions simultaneously, and assumptions about similarity can be difficult.

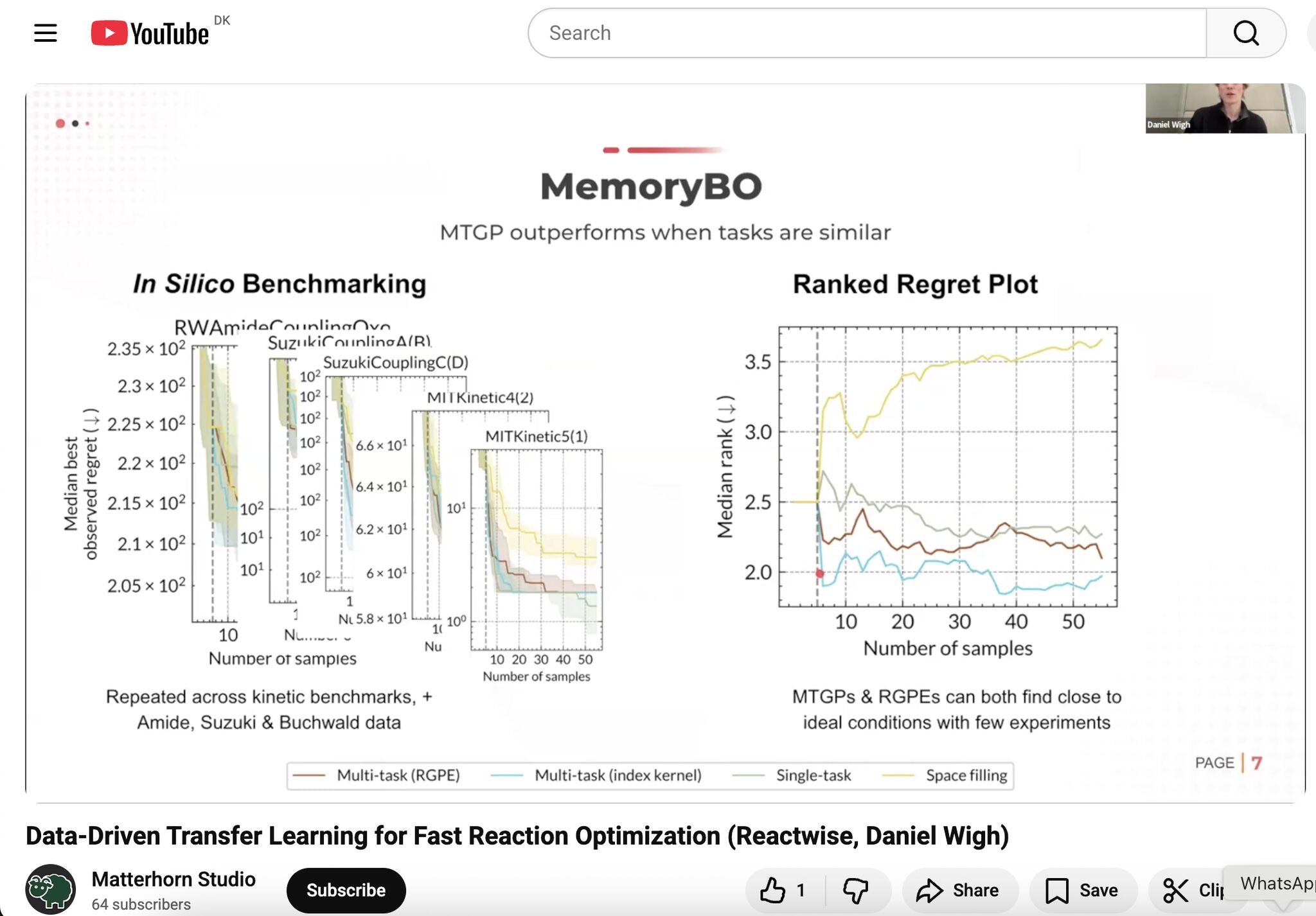

One strategy is to explicitly tie tasks together. Multi-task Gaussian Processes (MTGPs) do this by learning a shared representation across systems. When reactions or processes are closely related, this can dramatically speed up learning. When they aren’t, the model has no way to admit uncertainty about that assumption, and performance can quietly degrade.

Another strategy is to stay agnostic about similarity. Ranked Gaussian Process Ensembles (RGPEs) evaluate how well prior models generalize to a new system and let that evidence determine how much influence they have. Prior data can help early, then fade out as the model sees more task-specific measurements. This makes the approach more forgiving when prior experiments only partially apply.

ReactWise's CTO, Daniel Wigh, recently spoke about this trade-off at Active Learning UK, an online symposium about Bayesian optimization, thanks Jakob Zeitler for the invite! We performed evaluations with our own experimental data generated at ReactWise, and showed that doing transfer learning the right way can have a massive impact.

The 7 min talk is now up on YouTube - check it out here!